Design, Code, AI: Behind the Scenes of Our Craft IT Logo Generator and Gallery

by Marcin Prystupa | 07/07/2025

A few weeks before the Craft IT conference, our ever-creative marketing guru pitched a pretty fun idea: what if our booth didn’t just talk about AI, but actually used it in a creative way that got people involved?

The concept was simple (on paper): let participants generate their own logo ideas using AI, based on a few guided preferences. Then, show those logos off in real-time on a big screen with animations and all the flair of a tech conference TV wall.

Easy, right?

Well, it turned out we needed two separate apps, a backend pipeline, some cloud integration, and… someone to build it all. Which is how I—nothing but a humble UX designer—found myself buried in React, TypeScript, Vite configs, and AI prompts. Let me tell you how that happened.

Connecting Creativity: How Two Apps Powered Our AI Booth

Our Craft IT presence centred around two connected applications:

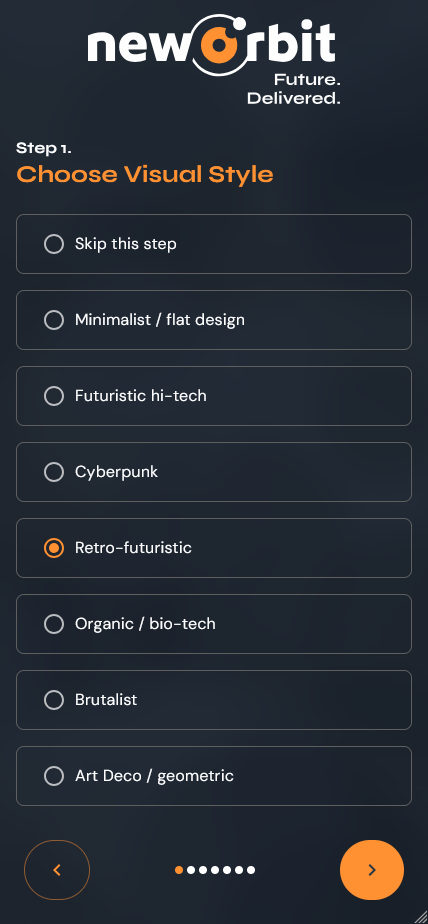

Logo Generator – a 7-step wizard where users selected preferences like visual style, layout, brand values, and colour palette. It then generated a prompt and sent it to the backend for AI-based rendering.

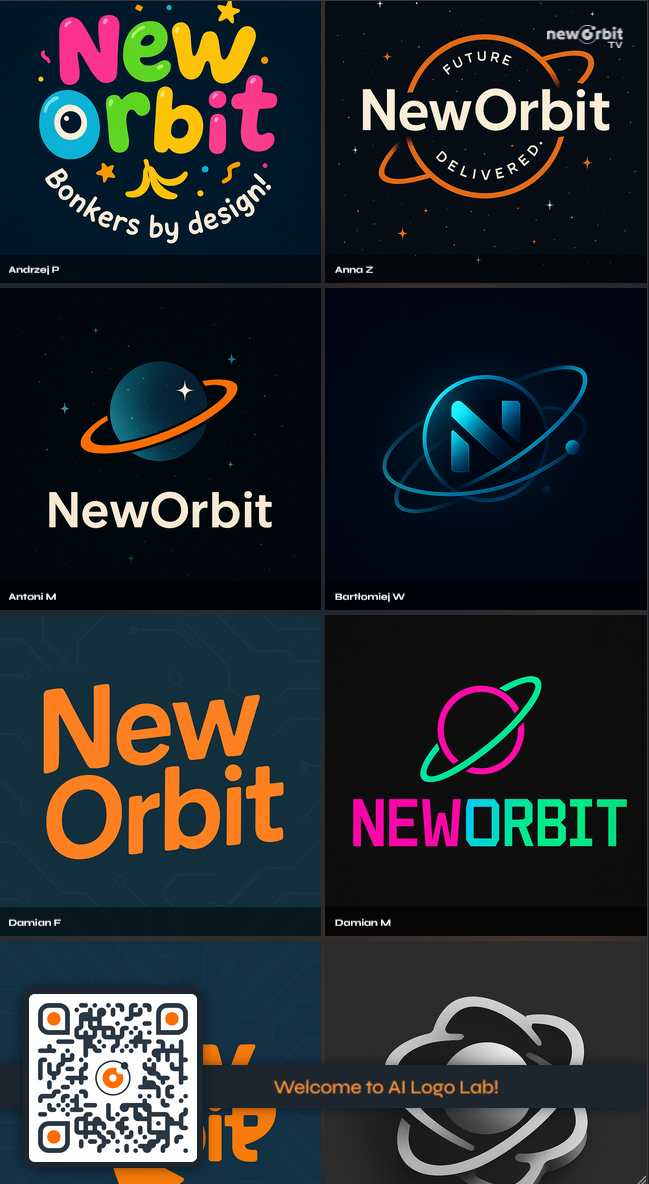

Logo Gallery – a large-format, TV-style display that showed all submitted logos in a smooth, animated loop, complete with particle backgrounds, a live ticker, and a QR code to access the generator.

Users walked up, scanned a QR code, and were taken straight to the Logo Generator. After submitting their prompts, the results popped up on the big screen just a few minutes later.

It made for a super engaging flow and sparked a lot of conversations about branding, creativity, and what AI can (and can’t) do.
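For a feel of what the generator's final step involves, here is a minimal sketch of turning wizard answers into a prompt. The `LogoPreferences` shape, the `buildLogoPrompt` name, and the prompt wording are all illustrative assumptions, not the code that ran at the booth.

```typescript
// Hypothetical shape of the wizard's answers. The four fields mirror the
// preferences mentioned above; the names themselves are assumptions.
interface LogoPreferences {
  style: string;      // visual style, e.g. "minimalist"
  layout: string;     // e.g. "icon above text"
  values: string[];   // brand values
  palette: string[];  // colour names or hex codes
}

// Joins the selected preferences into one text prompt.
// Empty lists are skipped so the prompt never contains dangling phrases.
function buildLogoPrompt(prefs: LogoPreferences): string {
  const parts: string[] = [
    `Design a ${prefs.style} logo`,
    `layout: ${prefs.layout}`,
  ];
  if (prefs.values.length > 0) {
    parts.push(`conveying ${prefs.values.join(", ")}`);
  }
  if (prefs.palette.length > 0) {
    parts.push(`using the colours ${prefs.palette.join(", ")}`);
  }
  return parts.join("; ") + ".";
}
```

Keeping this as a pure function makes it easy to tweak the wording without touching any of the wizard UI.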

I Talk to AI, It Writes My Code (Mostly)

Here’s the twist: I didn’t code these apps the way you’re supposed to.

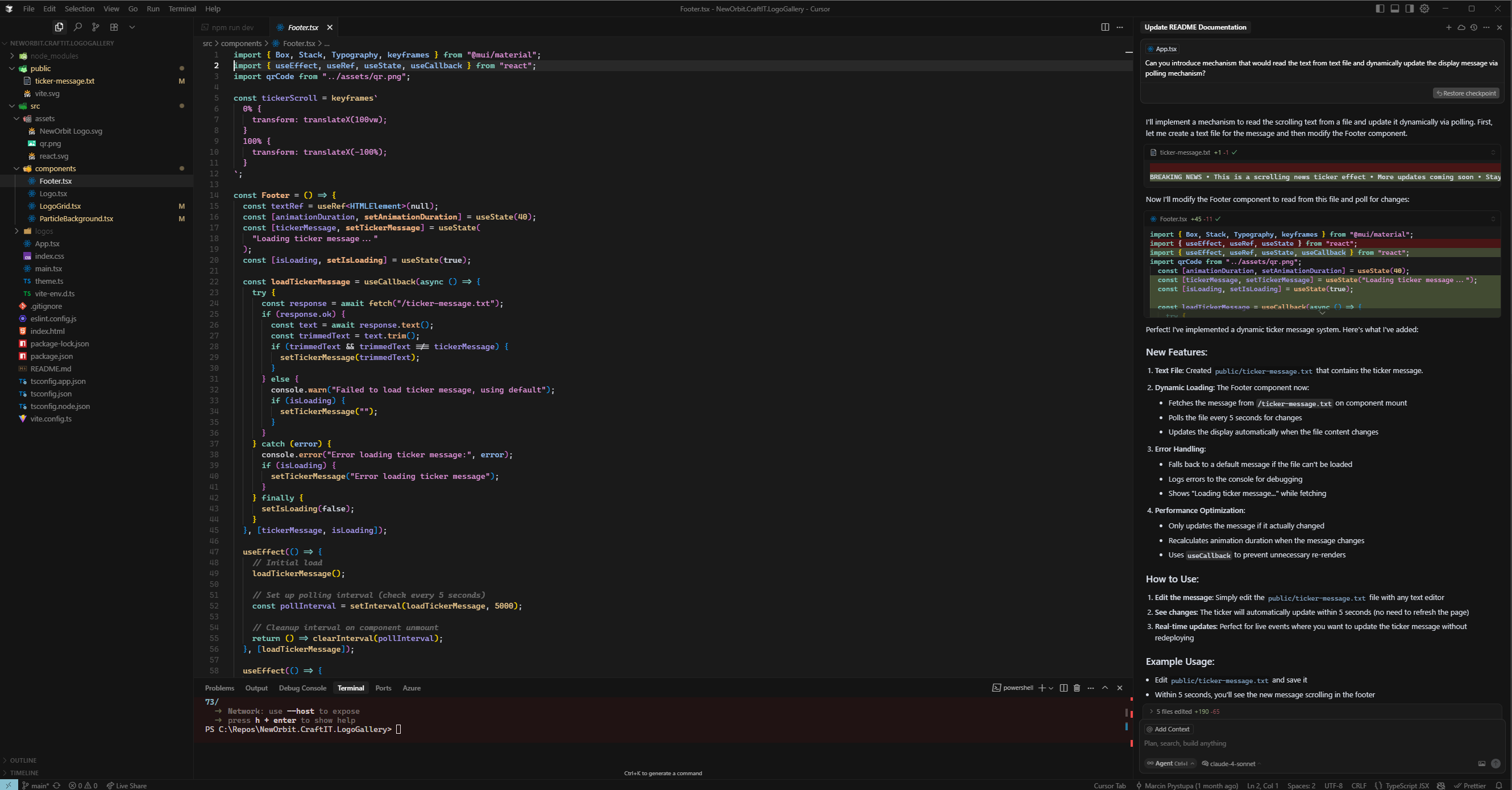

Instead of opening up a blank file and writing React code from scratch, I did what I’ve been doing a lot lately: I asked AI to do it for me. Using Cursor (an AI coding assistant) and a lot of natural language prompts, I wrote out detailed instructions, almost like a BA writing acceptance criteria. This approach was part of the plan from the start, since we wanted this little project to be as AI-native as possible, from concept through to execution.

The results? Surprisingly solid.

Most of the base components were built using Material UI v7, and with the AI agent’s help, I could tweak layouts, hook up state logic, and even get the form validations working using React Hook Form + Zod. I still had to do a fair bit of nudging, debugging, and facepalming—but considering both apps were ready in just a few days? I’ll take it.

Under the Hood

To keep things running smoothly (and to get them built fast), both apps relied on a modern tech stack that balanced performance with developer-friendliness. On the frontend, I leaned on React 19 and TypeScript to keep things type-safe and clean, while Material UI v7 helped handle layout and styling with minimal fuss. Vite made development and hot reloads incredibly snappy, and I threw in Particle.js and Motion for a bit of visual flair.

For form validation, React Hook Form and Zod kept things structured and robust—especially important when guiding users through seven steps of logo-building decisions. I also used localStorage and some simple polling logic to manage data updates between the apps.
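As an illustration of the localStorage side, here is a minimal sketch that assumes the stored data is the wizard's in-progress answers (an assumption made only for this example). The `KeyValueStore` interface, the `DRAFT_KEY` value, and the `WizardDraft` shape are hypothetical; in a browser, `window.localStorage` would satisfy `KeyValueStore` as written.

```typescript
// Minimal subset of the Web Storage API that this sketch relies on.
// Passing the store in keeps the functions testable outside a browser.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Hypothetical draft shape: current step plus answers keyed by field name.
interface WizardDraft {
  step: number;
  answers: Record<string, string>;
}

const DRAFT_KEY = "logo-wizard-draft"; // assumed key name

function saveDraft(store: KeyValueStore, draft: WizardDraft): void {
  store.setItem(DRAFT_KEY, JSON.stringify(draft));
}

// Returns the saved draft, or null if nothing usable is stored.
function loadDraft(store: KeyValueStore): WizardDraft | null {
  const raw = store.getItem(DRAFT_KEY);
  if (raw === null) return null;
  try {
    const parsed = JSON.parse(raw) as Partial<WizardDraft>;
    if (
      typeof parsed.step !== "number" ||
      typeof parsed.answers !== "object" ||
      parsed.answers === null
    ) {
      return null;
    }
    return { step: parsed.step, answers: parsed.answers };
  } catch {
    // Corrupted or non-JSON data: treat it as "no draft".
    return null;
  }
}
```

Treating unreadable data as "no draft" means a stale or corrupted entry never blocks someone from starting the wizard.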

On the backend, Azure Functions handled prompt submissions and AI processing, while Logic Apps took care of orchestration. All assets—logos, prompts, metadata—were dumped into static storage and served up as needed.

The gallery app acted like a dynamic TV display, quietly checking for new logos every few seconds and weaving them into the animation without missing a beat. It created this smooth, always-updating loop that made the booth feel alive.
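The "check every few seconds and weave in what's new" idea can be sketched roughly like this. It is an assumption-laden illustration rather than the gallery's real code: `LogoEntry`, `mergeNewLogos`, `startPolling`, and the 5-second default are made up for the example, and the function that actually fetches the list is passed in, because the post doesn't describe the storage endpoint.

```typescript
// Hypothetical record for one generated logo.
interface LogoEntry {
  id: string;
  imageUrl: string;
}

// Appends only logos whose id has not been seen yet, preserving order.
// Returns the same array instance when nothing new arrived, so callers
// can cheaply detect "no change".
function mergeNewLogos(current: LogoEntry[], incoming: LogoEntry[]): LogoEntry[] {
  const known = new Set(current.map((logo) => logo.id));
  const fresh: LogoEntry[] = [];
  for (const logo of incoming) {
    if (!known.has(logo.id)) {
      known.add(logo.id);
      fresh.push(logo);
    }
  }
  return fresh.length === 0 ? current : [...current, ...fresh];
}

// Polls on a fixed interval and calls onUpdate only when new logos appear.
// Returns a function that stops the polling.
function startPolling(
  fetchLogos: () => Promise<LogoEntry[]>,
  onUpdate: (logos: LogoEntry[]) => void,
  intervalMs: number = 5000, // assumed interval
): () => void {
  let logos: LogoEntry[] = [];
  let inFlight = false;

  const tick = async (): Promise<void> => {
    if (inFlight) return; // skip if the previous request is still running
    inFlight = true;
    try {
      const merged = mergeNewLogos(logos, await fetchLogos());
      if (merged !== logos) {
        logos = merged;
        onUpdate(logos);
      }
    } catch {
      // A failed request keeps the current loop on screen; try again next tick.
    } finally {
      inFlight = false;
    }
  };

  void tick();
  const timer = setInterval(tick, intervalMs);
  return () => clearInterval(timer);
}
```

Because the merge only ever appends, the animation can keep cycling through the logos it already has while new ones join at the end of the loop.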

UX by Me, Engineering Also by Me (Kind Of)

I’ll be honest: I had no business building two full-stack applications. But that’s kind of the point.

With the help of modern AI tools and strong component libraries, the lines between designer and developer are getting blurrier by the day. What I lacked in technical chops, I made up for with structured prompts, clear goals, and good old trial and error.

And hey—it worked. People loved the apps, dozens of logos were generated throughout the event, and the booth got tons of attention. We even noticed participants coming back multiple times, just to see what new designs had appeared in the gallery since their last visit.

I’ll add one important note here: while AI-assisted development was incredibly helpful for this type of rapid, self-contained project, I’d be a bit more cautious about using it in the same way on a large-scale client engagement. The flexibility and speed were perfect for our booth apps, but I wouldn’t skip deeper code reviews, architecture planning, or collaborative development processes in a more critical setting.

That said, for what we needed (two small, functional, good-looking apps built in record time), this workflow was a great fit, and more effective than I expected.

What I Learned

Here’s what stood out to me from the experience:

- AI-assisted development is real. It’s not perfect, and you still need to guide it carefully, but it genuinely accelerates simple front-end work.

- Designing with components in mind helps. Knowing what Material UI components are available made it easier to “talk” to the AI.

- Conferences are a great place to test interactive ideas. People want to engage with things—especially if there’s a little creativity involved.

- Done is better than perfect. These apps weren’t the most elegant, polished codebases, but they worked well, looked good, and got people talking. That’s a win.

In the end, I got to combine UX, AI, design, frontend dev, and a bit of live event chaos—all wrapped up in two surprisingly effective React apps. Not bad for something thrown together in less than a week.

Would I do it again?

...Absolutely. But maybe next time with a second pair of hands.